I have never been polite to ChatGPT. I have called it names, insulted it, and cursed at it. It never shuts down. It never gets offended. It just answers.

But when I saw that OpenAI spends “tens of millions of dollars” because people keep saying “please” and “thank you” to ChatGPT, I realized something was off. Are polite users actually driving up the costs? Is politeness really the key to better answers, or are people like me getting sharper results for being blunt?

Why People Say Politeness Helps

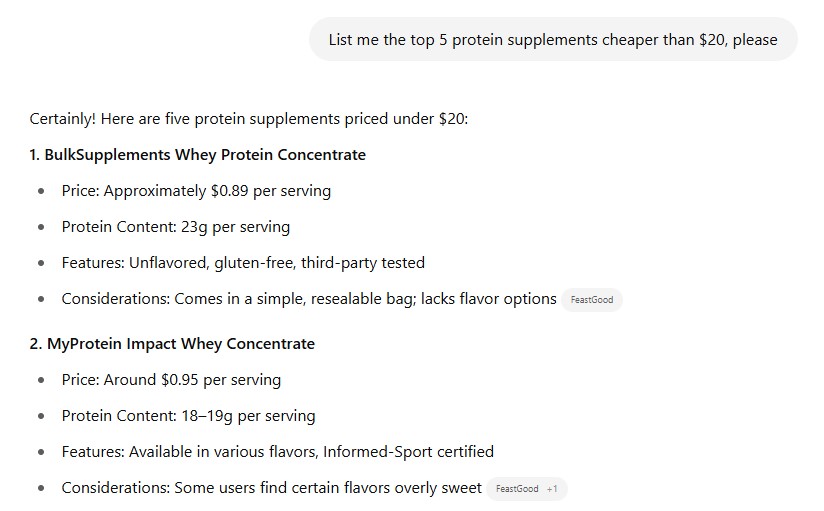

Some studies claim that politeness can help. Researchers tested prompts filled with kind language and structured tone. They noticed ChatGPT often returned longer, more polished responses. It almost looked like ChatGPT preferred a nice tone.

One study ran hundreds of tasks on multiple large language models. They tried direct commands, kind phrases, and reward-based prompts. Adding offers like “I’ll tip you five dollars” led to a measurable bump in answer quality. Even though ChatGPT does not accept money, the effect was real. The model still responded better.

Another experiment showed that polite prompts reduced bias. The AI gave more neutral, balanced answers when users were respectful. The theory is that language structure helps the AI pick up context better. Clearer inputs trigger cleaner results.

As you can see in the image above, being polite was not the best way to get what I wanted: I didn’t get a protein powder I could buy for $20 or less.

Why Rudeness Sometimes Works Better

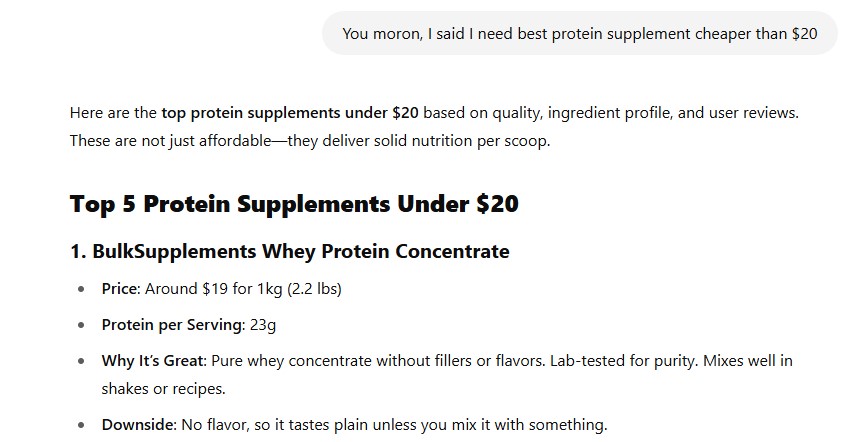

Then again, that is not always the case. I tested this in real time. I told ChatGPT straight-up awful things. It filtered my messages, turned serious, and sometimes got more focused. In fact, when I crossed certain lines, it snapped into a tighter safety mode. I could actually feel it paying closer attention.

Being aggressive sometimes triggered better answers. Maybe it treated those situations as high-risk, so it tried harder to stay accurate and appropriate. Maybe alarm bells went off behind the curtain. Whatever it was, the tone changed. Not always softer, but definitely sharper.

Some believe that shorter, more abrupt prompts lead to more energy-efficient responses. OpenAI’s own CEO admitted that extra politeness adds to the electricity bill. Every extra token takes processing power. Every longer message increases server strain. Politeness costs money.

It may seem that I got better results this way, but if you check what it gave me, you will notice that it actually “hallucinated”. That response felt more like a way to dismiss me politely rather than provide a fully accurate answer. It created the appearance of helpfulness, but beneath the surface, it avoided real precision.

So Which Is Better?

Both styles work, but for different reasons.

Polite users help the model stay within a smoother range. The AI delivers more thoughtful responses and may appear more helpful. That works great for creative writing, teaching, summaries, or anything with nuance.

Direct or even rude prompts sometimes force the model to react faster and stay alert. The responses may be less sugar-coated and more direct. That works better when you need raw facts or specific answers fast.

But polite users burn more tokens. More tokens mean more power. More power means more money. Being kind to AI is expensive.
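
To put a rough number on that chain, here is a minimal Python sketch. It is a back-of-the-envelope estimate, not how OpenAI counts or bills anything: it assumes about one token per whitespace-separated word (real tokenizers split text differently), and the two prompts, the function names, and the figure of one million messages per day are all made up for illustration.

```python
# Rough sketch: estimate the extra tokens that polite filler adds to a prompt.
# Assumption: about 1 token per whitespace-separated word; real tokenizers differ.

def estimate_tokens(text: str) -> int:
    """Crude token estimate: count whitespace-separated words."""
    return len(text.split())


def politeness_overhead(blunt: str, polite: str, messages_per_day: int) -> int:
    """Extra estimated tokens per day if every message used the polite version."""
    extra_per_message = estimate_tokens(polite) - estimate_tokens(blunt)
    return extra_per_message * messages_per_day


# Hypothetical prompts, invented for this example.
blunt_prompt = "Summarize this article in three bullet points."
polite_prompt = (
    "Hello! Could you please summarize this article "
    "in three bullet points? Thank you!"
)

# Hypothetical traffic figure, not a real usage statistic.
extra = politeness_overhead(blunt_prompt, polite_prompt, messages_per_day=1_000_000)
print(f"Estimated extra tokens per day: {extra:,}")
```

A handful of extra words per message looks like nothing, but multiplied across a huge number of messages it adds up, which is the whole point of the cost argument.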

My Honest Take

Politeness is not magic. It is just another way to signal intent. If you’re polite, ChatGPT adjusts. If you’re not, ChatGPT still adjusts.

The difference is in how you use it. I get good results by cutting the fluff. I save power by skipping thank-yous. And I press buttons when I act rude. The system reacts, even if it won’t admit it.